Three Key Considerations for Mental Health Tools

Adapted from the congressional testimony of Dr. Mitch Prinstein, Chief Science Officer, American Psychological Association (APA)

As AI continues to reshape how we deliver and experience mental health support, one truth must remain at the center: technology should serve human well-being, not compromise it.

Dr. Mitch Prinstein of the American Psychological Association outlined essential commitments for developers, policymakers, and organizations building AI-driven mental health tools. Below are three considerations for human-centered innovation—rooted in transparency, privacy, and equity.

1. Transparency and Ethical Interaction

Build trust through honesty and accountability.

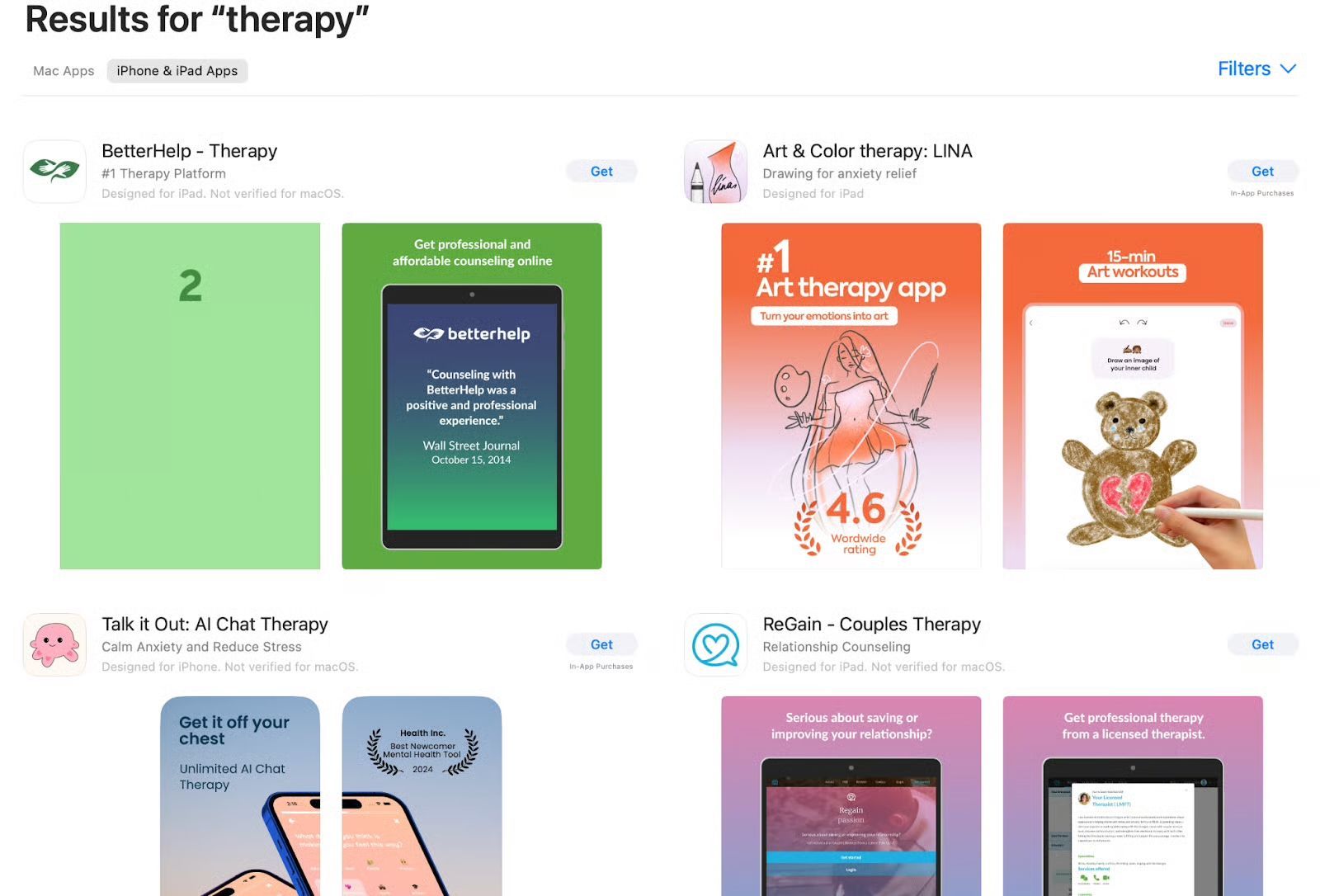

AI systems should never misrepresent themselves as human—or as licensed professionals like psychologists or therapists. Transparency helps users understand the boundaries of AI and reinforces the essential role of real human connection in care.

- Disclose clearly and persistently when users are interacting with AI.

- Make training data auditable to identify bias and ensure accountability.

- Keep humans “in the loop” for any decisions involving mental health.

Ethical Guardrail: Harm Reduction.

Transparency prevents misinformation and protects users from undue reliance on AI for sensitive emotional support or crisis decisions.

2. Privacy and Protection by Design

Protecting users—especially young people—must be the default, not the exception.

Children and adolescents are particularly vulnerable to manipulative design, exposure to bias, and developmental harm. Companies must take proactive steps to ensure safety and privacy across all digital touchpoints.

- Conduct independent pre-deployment testing for developmental safety with developmental psychology experts.

- Enforce “safe-by-default” settings that prioritize privacy and minimize persuasive or addictive design.

- Prohibit the sale or use of minors’ data for commercial purposes.

- Safeguard biometric and neural data, “including emotional and mental state information.”

Ethical Guardrail: Privacy.

Every mental health tool must respect users’ autonomy and confidentiality—especially when dealing with personal or biometric data. Privacy is not just a compliance box; it’s an ethical obligation.

3. Research, Equity, and Accountability

Commit to long-term learning and equitable outcomes.

AI development should never outpace our understanding of its impact. Responsible innovation means continuously studying who benefits and who might be harmed by these systems.

- Fund independent, publicly accessible, long-term research on AI’s effects on mental health, especially in youth populations.

- Enable researcher access to data for unbiased studies.

- Prioritize equity in design by involving psychological experts to ensure AI systems work across diverse populations without amplifying discrimination or bias.

Ethical Guardrail: Equity.

AI must be trained, tested, and refined with inclusivity in mind. Equal access, representation, and protection are non-negotiable for ethical AI in mental health.

The Bottom Line

Technology can expand access to care, but it can also amplify harm if ethics aren’t embedded at every step.

APA’s Health Advisory on AI Chatbots and Wellness Apps also offers insights into how AI tools can be designed to protect vulnerable populations and reduce disparities in digital mental and behavioral health.

By prioritizing transparency, privacy, and equity, we ensure that innovation in mental health technology remains human-centered, developmentally informed, and psychologically safe.

👉 Download the Ethical Guardrails checklist to help assess whether your digital mental and behavioral health tool aligns with three core principles: transparency, privacy, and equity.

Based on the congressional testimony of Dr. Mitch Prinstein, Chief Science Officer, American Psychological Association (APA).
